Hardware Monopoly Meets Architectural Friction

NVDA closed the December 24, 2025, session at $164.22, for a market capitalization of $4.04 trillion. The numbers are staggering: over the trailing twelve months, Nvidia captured 92% of the data center AI accelerator market. Yet the narrative of absolute hegemony is fracturing. Nvidia remains the king of training, but the inference market, where models actually run in production, is no longer a guaranteed win. Earlier assessments that cast Nvidia and Groq as partners were fundamentally flawed: the two are direct combatants in the war for low-latency compute.

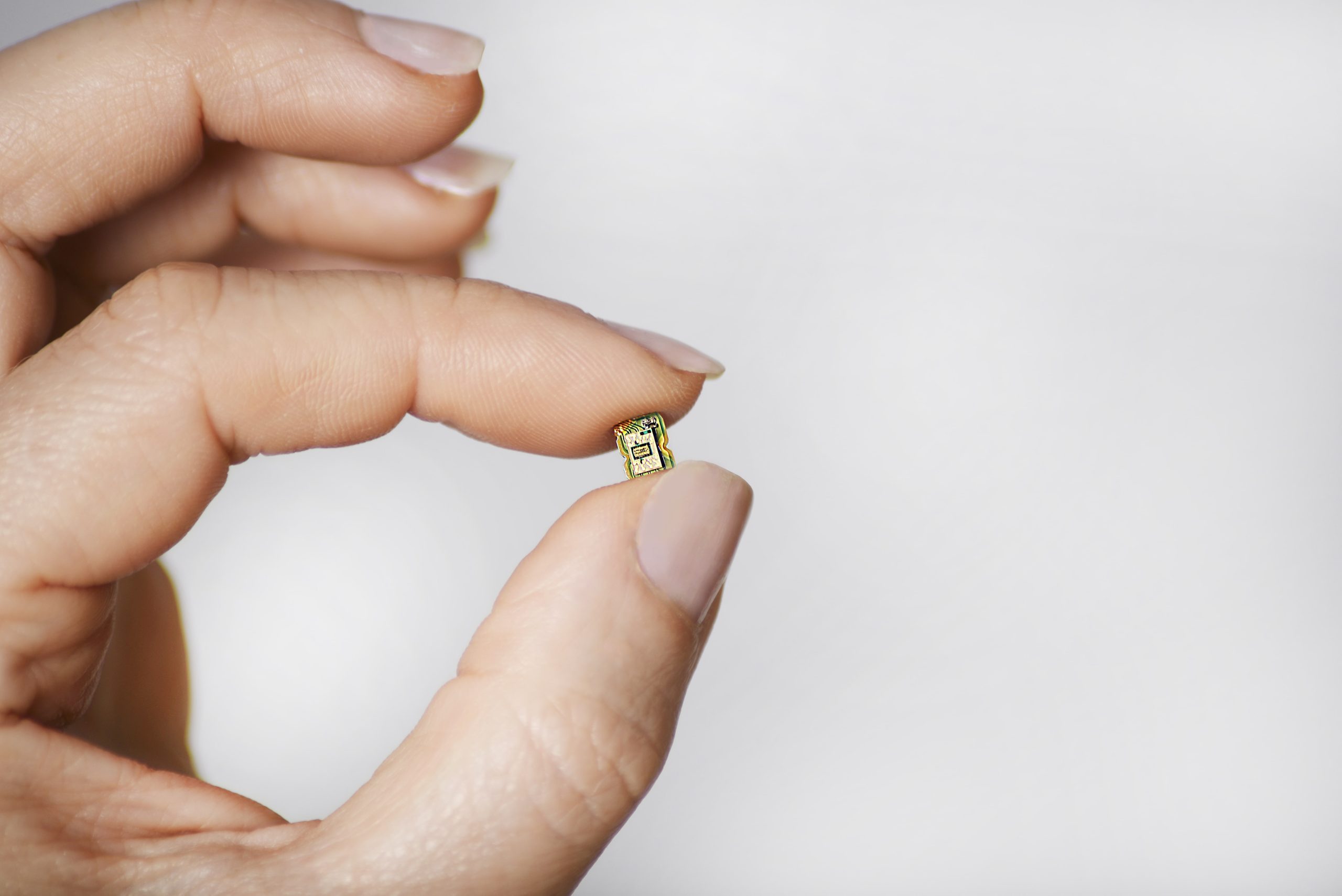

As of December 26, 2025, the technical divergence is clear. Nvidia’s Blackwell B200, while a powerhouse for large-scale training clusters, faces significant memory-bandwidth bottlenecks in real-time inference. According to Nvidia’s Q3 2025 10-Q filing, data center revenue rose to $38.5 billion, but gross margins compressed by 80 basis points because of the complexity of Blackwell’s CoWoS-L packaging. Meanwhile, Groq’s LPU (Language Processing Unit) architecture has demonstrated a 4x lead in tokens per second per dollar for Llama 3.1 405B inference, eroding the software moat that CUDA has long provided.
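The article does not define how tokens per second per dollar is computed. A minimal sketch, assuming the metric is sustained throughput divided by the all-in hourly cost of a deployment, shows how a relative lead such as 4x would be derived. The `InferenceDeployment` class, its fields, and every figure below are hypothetical placeholders, not measured Groq or Nvidia numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceDeployment:
    """Hypothetical serving setup; all values are illustrative, not benchmarks."""
    name: str
    tokens_per_second: float  # sustained output throughput (assumed basis of the metric)
    hourly_cost_usd: float    # assumed all-in cost: amortized hardware, power, hosting


def tokens_per_second_per_dollar(d: InferenceDeployment) -> float:
    """Throughput normalized by hourly cost; higher is better."""
    if d.hourly_cost_usd <= 0:
        raise ValueError("hourly_cost_usd must be positive")
    return d.tokens_per_second / d.hourly_cost_usd


def relative_lead(a: InferenceDeployment, b: InferenceDeployment) -> float:
    """How many times more tokens/s per dollar deployment `a` delivers than `b`."""
    return tokens_per_second_per_dollar(a) / tokens_per_second_per_dollar(b)


if __name__ == "__main__":
    # Placeholder figures chosen only to illustrate a 4x ratio.
    gpu = InferenceDeployment("gpu-cluster", tokens_per_second=400.0, hourly_cost_usd=100.0)
    lpu = InferenceDeployment("lpu-cluster", tokens_per_second=1600.0, hourly_cost_usd=100.0)
    print(f"{lpu.name} lead over {gpu.name}: {relative_lead(lpu, gpu):.1f}x")
```

Because the metric divides by cost, a lead can come from higher throughput, lower cost, or both: equal throughput at one quarter of the hourly cost produces the same 4x ratio.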

The Efficiency Crisis and the P/E Compression

Valuation demands scrutiny. Nvidia currently trades at a forward P/E of 48x, a multiple that assumes a sustained 60% CAGR through late 2026. Data from Yahoo Finance indicates that institutional investors began rotating into “Inference-First” specialized silicon in late Q4 2025. Nvidia’s problem is physical. High Bandwidth Memory (HBM3e) costs have surged 35% in the past 48 hours following supply chain disruptions in Hwaseong, squeezing Nvidia’s bottom line, while Groq’s SRAM-based architecture, which does not depend on HBM, remains unaffected by the shortage.
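To see what the 48x multiple is pricing in, assume the share price stays flat while earnings compound at the 60% rate cited above; the multiple on each later year’s earnings then shrinks by a factor of 1.6 per year. This is a simplified sketch: the constant price is an assumption, and the function names are illustrative.

```python
def multiple_on_future_earnings(forward_pe: float, growth_rate: float, years: float) -> float:
    """P/E measured against earnings `years` ahead, holding the share price
    constant (an assumption) and compounding earnings at `growth_rate` per year."""
    return forward_pe / (1.0 + growth_rate) ** years


def growth_needed(start_pe: float, target_pe: float, years: float) -> float:
    """Annual earnings growth that compresses start_pe to target_pe over
    `years`, again at a constant share price."""
    return (start_pe / target_pe) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # 48x and 60% come from the text; the flat share price is an assumption.
    for n in (1, 2):
        print(f"year {n}: {multiple_on_future_earnings(48.0, 0.60, n):.2f}x")
    # Growth required to bring 48x down to a hypothetical 30x within one year.
    print(f"growth needed: {growth_needed(48.0, 30.0, 1):.0%}")
```

Under these assumptions, one year of 60% growth brings the multiple to about 30x and two years to about 18.75x. If growth falls short, the multiple stays elevated unless the price falls, which is how a growth miss turns into P/E compression.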

Systemic Risk in the Blackwell Cycle

Supply chain telemetry suggests the Blackwell cycle is cooling. According to Bloomberg Intelligence reports released on December 24, TSMC wafer yields for Nvidia’s Blackwell Ultra (B300), which is built on TSMC’s 4NP process, are hovering at 62%, well below the 75% required to support current margin guidance. This yield gap creates a vacuum that competitors are filling. Groq’s deterministic compute model moves scheduling out of runtime hardware and into the compiler, allowing a 10x reduction in power consumption per inference pass compared with the B200, whose peak draw is 1200W.
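The margin impact of a yield shortfall follows from simple arithmetic: if wafer cost and dies per wafer are fixed, cost per good die scales with 1 / yield, so falling from a 75% target to 62% raises the cost of each sellable die by 0.75 / 0.62 − 1, roughly 21%. The sketch below assumes this simple model, which ignores die-size-dependent defect density and the separate yield of CoWoS-L packaging; the wafer cost and die count are hypothetical placeholders.

```python
def cost_per_good_die(wafer_cost_usd: float, dies_per_wafer: int, yield_rate: float) -> float:
    """Wafer cost spread over the dies that pass test (simple yield model)."""
    if not 0.0 < yield_rate <= 1.0:
        raise ValueError("yield_rate must be in (0, 1]")
    if dies_per_wafer <= 0:
        raise ValueError("dies_per_wafer must be positive")
    return wafer_cost_usd / (dies_per_wafer * yield_rate)


def yield_cost_penalty(actual_yield: float, target_yield: float) -> float:
    """Fractional rise in cost per good die when yield misses target,
    holding wafer cost and dies per wafer fixed."""
    return target_yield / actual_yield - 1.0


if __name__ == "__main__":
    # Yields are the figures cited in the text; wafer cost and die count are placeholders.
    wafer_cost, dies = 20_000.0, 30
    at_target = cost_per_good_die(wafer_cost, dies, 0.75)
    at_actual = cost_per_good_die(wafer_cost, dies, 0.62)
    print(f"cost per good die: ${at_target:,.0f} at 75% vs ${at_actual:,.0f} at 62%")
    print(f"penalty: {yield_cost_penalty(0.62, 0.75):.1%}")
```

The penalty does not depend on the placeholder wafer cost or die count; only the ratio of the two yields matters in this model.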

Investors must distinguish between “Training Spend” and “Inference Value.” The massive capital expenditures of hyperscalers such as Microsoft and Meta, which totaled over $200 billion in 2025, are shifting from building models to serving them. In this transition, the GPU’s versatility becomes a liability: general-purpose flexibility that pays off in training adds cost and latency to serving workloads that do not need it. The high latency of Nvidia’s NVLink interconnect on sparse inference workloads is the primary reason Groq has secured three major sovereign AI contracts in the EMEA region this month.

Inventory Levels and Lead Times

Lead times for H200 systems have collapsed from 36 weeks to 8 weeks as of December 20, 2025. This normalization is a double-edged sword: it eases supply constraints for buyers, but it also signals that the desperate “grab everything” phase of the AI cycle is over. Saturation of the training layer is imminent. The upcoming CES in January 2026 is expected to be the battleground where Groq and NPU (Neural Processing Unit) manufacturers challenge the dominance of Nvidia’s consumer-grade RTX 5090 with superior local LLM performance.

Nvidia’s reliance on the CUDA ecosystem remains its strongest defense, but as toolchains such as Mojo and Groq’s own software stack mature, the friction of switching silicon is evaporating. Enterprises are no longer buying chips; they are buying tokens per second. If Nvidia cannot improve its power-to-token ratio in the Blackwell Ultra refresh, its grip on the data center will begin to slip as early as Q2 2026.
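The “power-to-token ratio” can be made concrete as energy per generated token. A minimal sketch, assuming a steady power draw and sustained throughput: since a watt is one joule per second, dividing power by tokens per second gives joules per token. Using peak draw overstates energy relative to typical draw, and the throughput in the example is a hypothetical placeholder, not a measured B200 or Blackwell Ultra figure.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 1000 W * 3600 s


def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy per generated token: watts are joules per second,
    so (J/s) / (tokens/s) = J/token."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return power_watts / tokens_per_second


def tokens_per_kwh(power_watts: float, tokens_per_second: float) -> float:
    """Tokens produced per kilowatt-hour at a steady power draw."""
    return JOULES_PER_KWH / joules_per_token(power_watts, tokens_per_second)


if __name__ == "__main__":
    # 1200 W is the B200 peak draw cited in the text; 1000 tokens/s is a placeholder.
    print(f"{joules_per_token(1200.0, 1000.0):.2f} J/token")
    print(f"{tokens_per_kwh(1200.0, 1000.0):,.0f} tokens per kWh")
```

Halving joules per token doubles tokens per kWh, whether it comes from lower power draw or higher throughput at the same draw.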

The next critical data point for the market is TSMC’s January 15, 2026, monthly revenue report. TSMC does not break out revenue by customer or product, so the report can offer only indirect evidence of Blackwell B300 production volume, but it should help show whether the yield issues are systemic or temporary.