The Era of Uncontested GPU Hegemony is Ending

Nvidia faces a math problem. As of October 20, 2025, the data indicate that the scarcity premium that defined the H100 cycle is evaporating. Nvidia remains the dominant force in high-performance computing, but its gross margins of more than 75 percent are under direct assault. This is not a theoretical threat. It is a fundamental shift in the capital expenditure patterns of its four largest customers: Microsoft, Alphabet, Meta, and Amazon.

Margins are the target. According to the latest SEC filings from the hyperscaler cohort, combined annual capital expenditures on AI infrastructure have surpassed 250 billion dollars. As these costs balloon, displacing Nvidia's high-margin silicon with in-house application-specific integrated circuits (ASICs) has become a fiduciary necessity rather than a strategic experiment. The market is no longer just buying chips. It is building them.
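To make the margin argument concrete, here is a minimal sketch of how price concessions flow through to gross margin when unit cost stays fixed. The $30,000 selling price and $7,500 unit cost are hypothetical round numbers chosen only so that the starting margin is 75 percent; they are not Nvidia's disclosed figures.

```python
# Illustrative only: the ASP and unit cost below are hypothetical round numbers,
# not Nvidia's disclosed figures.


def gross_margin(asp: float, unit_cost: float) -> float:
    """Gross margin as a fraction of revenue: (price - cost) / price."""
    if asp <= 0:
        raise ValueError("ASP must be positive")
    return (asp - unit_cost) / asp


def margin_after_price_cut(asp: float, unit_cost: float, cut: float) -> float:
    """Margin if the selling price falls by `cut` (0.15 = 15%) while unit cost stays fixed."""
    return gross_margin(asp * (1.0 - cut), unit_cost)


if __name__ == "__main__":
    asp = 30_000.0       # hypothetical selling price per accelerator (USD)
    unit_cost = 7_500.0  # hypothetical cost, chosen so the baseline margin is 75%
    print(f"baseline margin: {gross_margin(asp, unit_cost):.1%}")
    for cut in (0.05, 0.15, 0.30):
        m = margin_after_price_cut(asp, unit_cost, cut)
        print(f"{cut:.0%} price cut -> margin {m:.1%}")
```

Because cost does not fall with price in this toy model, every dollar of ASP concession comes straight out of gross profit: under these assumptions a 15 percent price cut takes the margin from 75 percent to roughly 70.6 percent.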

The Blackwell Bottleneck and Yield Realities

Blackwell is here, but it arrived with baggage. The Blackwell-based B200 and GB200 were designed to deliver up to a 30-fold increase in LLM inference performance over the H100. The transition from Hopper, however, has not been seamless. Industry data from the past 48 hours indicate that while TSMC has resolved the CoWoS-L packaging yield problems that hindered initial mass production in mid-2025, the cost per wafer has climbed significantly.

Efficiency is the new currency. Nvidia is pushing the limits of TSMC's 4NP process node, but the GB200 NVL72 rack, which draws up to 120 kilowatts, is forcing a massive overhaul of datacenter cooling infrastructure. Per Bloomberg Market Data, lead times for liquid cooling components have risen 40 percent since August. This infrastructure lag places a natural ceiling on how fast Nvidia can convert its record-breaking backlog into recognized revenue.
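A back-of-envelope sketch shows why a 120 kilowatt rack is a facilities problem rather than a chip problem. It takes the 120 kW figure and the 72 GPUs implied by the NVL72 name from the text and assumes continuous full-load operation, which real deployments will not sustain exactly; nearly all of that electrical power ends up as heat the cooling plant must remove.

```python
# Back-of-envelope rack figures. RACK_POWER_KW and GPUS_PER_RACK come from the text;
# continuous full-load operation is an assumption made only for the energy estimate.

RACK_POWER_KW = 120.0  # upper end of the GB200 NVL72 rack figure cited above
GPUS_PER_RACK = 72     # the "72" in NVL72
HOURS_PER_YEAR = 8_760.0


def power_per_gpu_kw(rack_kw: float, gpus: int) -> float:
    """Average rack power per GPU slot. This folds in CPUs, switches and other
    rack components, so it overstates the draw of a GPU on its own."""
    return rack_kw / gpus


def annual_energy_kwh(power_kw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy consumed (and heat to be rejected) at a constant load over `hours`."""
    return power_kw * hours


if __name__ == "__main__":
    print(f"{power_per_gpu_kw(RACK_POWER_KW, GPUS_PER_RACK):.2f} kW per GPU slot")
    print(f"{annual_energy_kwh(RACK_POWER_KW):,.0f} kWh per rack-year at full load")
```

Roughly 1.67 kW per GPU slot and about 1.05 GWh per rack-year under these assumptions helps explain why liquid cooling, not silicon, sets the deployment pace.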

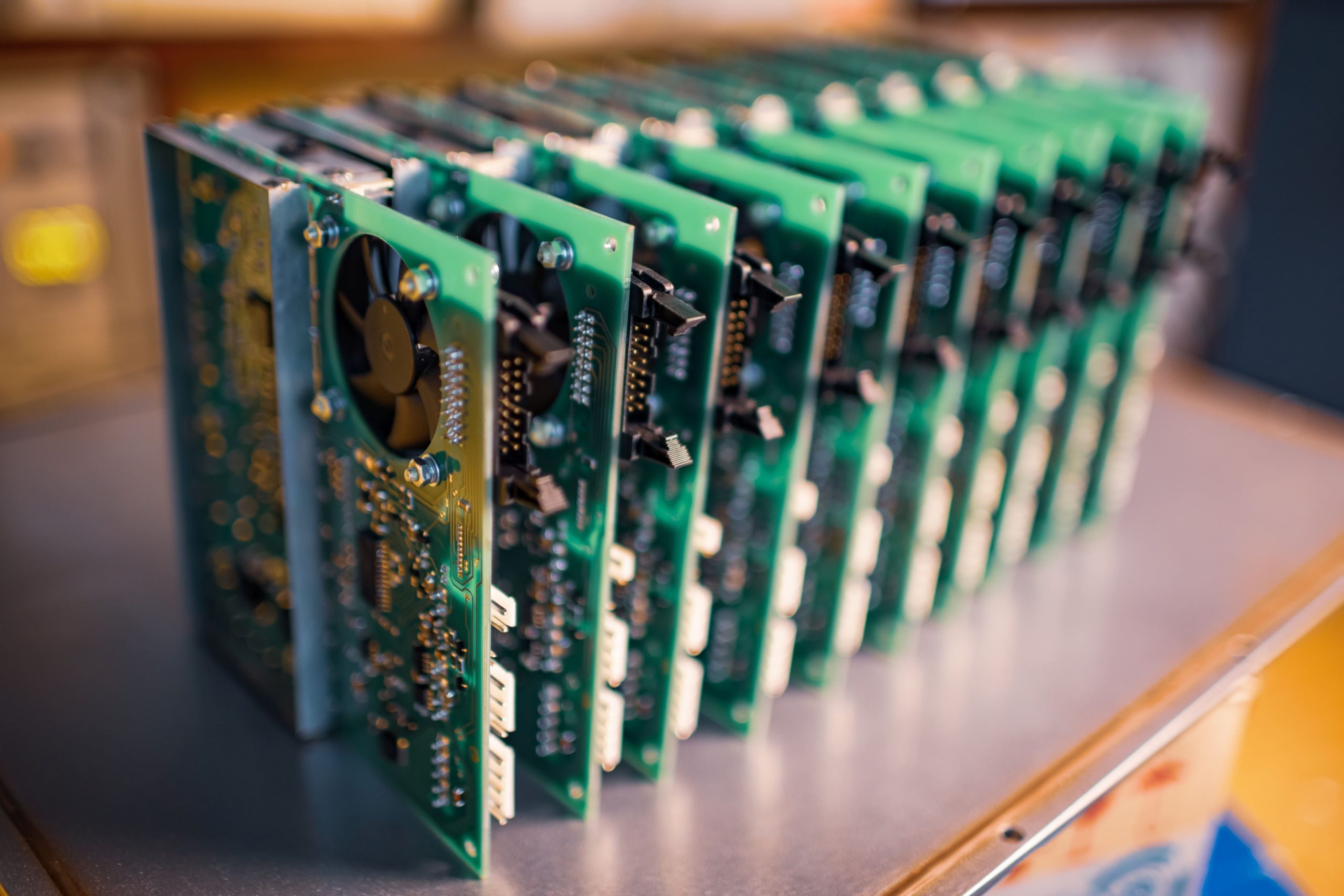

The Rise of the Internal Competitor

Internalization is the silent margin killer. Google has migrated a significant portion of its Gemini training workloads to TPU v6, which offers superior price-performance for its internal frameworks. Meanwhile, Amazon Web Services is aggressively marketing its Trainium2 chips to third-party developers, advertising training costs 40 percent lower than equivalent Nvidia instances. These are not secondary options. They are primary alternatives that cap Nvidia's pricing power.
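One way to see how a cheaper alternative caps pricing power is a simple break-even test: a customer switches once cumulative savings exceed the one-time cost of porting a workload. Only the 40 percent discount comes from the text; the per-run cost and porting cost below are hypothetical placeholders.

```python
# Hypothetical switching test. Only the 40 percent discount comes from the text;
# every dollar figure is an illustrative assumption.


def savings_per_run(nvidia_run_cost: float, discount: float) -> float:
    """Dollars saved on one training run by moving to the cheaper platform."""
    return nvidia_run_cost * discount


def runs_to_break_even(nvidia_run_cost: float, discount: float, porting_cost: float) -> float:
    """Training runs needed before cumulative savings cover the one-time porting cost."""
    per_run = savings_per_run(nvidia_run_cost, discount)
    if per_run <= 0:
        return float("inf")  # no savings means switching never pays off
    return porting_cost / per_run


if __name__ == "__main__":
    run_cost = 5_000_000.0      # hypothetical cost of one run on Nvidia instances (USD)
    porting_cost = 8_000_000.0  # hypothetical engineering cost to port and validate (USD)
    n = runs_to_break_even(run_cost, 0.40, porting_cost)
    print(f"break-even after {n:.1f} runs")
```

Under these assumptions the port pays for itself after four runs. The lower the porting cost, the sooner switching pays, which is why the software layer matters so much to the pricing question.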

Software remains the last moat. Nvidia's CUDA platform is the industry standard, but open-source abstractions such as PyTorch and OpenAI's Triton are making it easier for developers to move between hardware backends. If the software layer becomes hardware-agnostic, Nvidia's hardware will be forced to compete on price alone. This shift is already visible in the inference market, where Nvidia's dominance is far more contested than in training.

Inventory and the Secondary Market

Supply is finally meeting demand. For the first time since the generative AI boom began, lead times for H200 GPUs have dropped below 10 weeks. The normalization shows up in secondary-market H100 prices, which have fallen 15 percent over the last quarter as companies optimize their clusters and offload excess capacity. Yahoo Finance tech-sector data show that while Nvidia's stock remains resilient, the volatility index for semiconductor equities is rising as the market anticipates the end of the hypergrowth phase.

| Hardware Model | Architecture | Estimated ASP (USD) | Primary Bottleneck |
|---|---|---|---|
| H100 | Hopper | $25,000 – $30,000 | HBM3 Supply |
| B200 | Blackwell | $35,000 – $45,000 | CoWoS-L Yields |
| GB200 | Blackwell | $60,000 – $70,000 | Liquid Cooling Logistics |
| TPU v6 | Custom ASIC | N/A (Internal) | Software Compatibility |

Technical hurdles are shifting from silicon to systems. The challenge for Nvidia in late 2025 is no longer just making a faster chip. It is integrating those chips into a coherent system that can survive the power and heat requirements of a modern datacenter. The GB200 NVL72 is a feat of engineering, but it requires a level of facility customization that many tier-2 and tier-3 providers cannot afford. This creates a concentration risk in which Nvidia becomes increasingly dependent on a handful of massive buyers, precisely the customers with the most leverage to negotiate lower prices.

The next milestone to watch is the January 2026 CES keynote, where Nvidia is expected to reveal final production specifications for the Rubin platform. Unlike Blackwell, Rubin is expected to move to a 3nm- or 2nm-class node and to HBM4 memory. Investors should track whether initial Rubin orders reflect a continued expansion of compute demand or a defensive replacement cycle as older H100 clusters reach the end of their three-year depreciation schedules.
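The replacement-cycle question hinges on when older hardware is fully written down. A minimal sketch of straight-line depreciation over a three-year life follows. It assumes, for illustration, a cost basis equal to the midpoint of the H100 ASP range in the table and zero salvage value; a real cluster's cost basis would also include networking, power, and facilities.

```python
# Straight-line depreciation over a three-year (36-month) life. The cost basis is an
# assumption: the midpoint of the table's H100 ASP range, with zero salvage value.


def book_value(cost: float, life_months: int, months_elapsed: int, salvage: float = 0.0) -> float:
    """Book value under straight-line depreciation, floored at the salvage value."""
    if life_months <= 0:
        raise ValueError("life_months must be positive")
    monthly = (cost - salvage) / life_months
    return max(salvage, cost - monthly * months_elapsed)


if __name__ == "__main__":
    h100_cost = 27_500.0  # assumed: midpoint of the $25,000 - $30,000 range
    for months in (0, 12, 24, 36, 48):
        print(months, round(book_value(h100_cost, 36, months), 2))
```

Once book value hits zero at month 36, the hardware no longer carries a depreciation charge, so replacing it with Rubin becomes an easier accounting decision whether or not compute demand is still expanding.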